def GaussJacobi(A, b, x, x_solution, tol): The second implementation is based on this article.

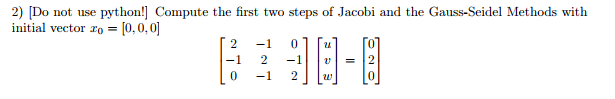

X_new = np.zeros(N, dtype=np.double) #x(k+1) The first implementation is what I originally came up with import numpy as npĭef GaussJacobi(A, b, x, x_solution, tol): dev.When implementing the Gauss Jacobi algorithm in python I found that two different implementations take a significantly different number of iterations to converge.
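One thing worth checking when two Jacobi-style loops need different iteration counts is whether both really write the new iterate into a separate array. The sketch below is not either implementation from the question. The test system, the relative infinity-norm stopping rule, and the names `jacobi` and `in_place_sweep` are assumptions made for illustration. It shows that overwriting `x` during the sweep turns the method into Gauss-Seidel, which typically changes the iteration count.

```python
import numpy as np


def jacobi(A, b, x0, tol=1e-3, max_iter=100):
    """Jacobi: every component of x(k+1) uses only the old iterate x(k)."""
    x = np.array(x0, dtype=np.double)
    n = len(b)
    for k in range(1, max_iter + 1):
        x_new = np.zeros(n, dtype=np.double)  # separate array for x(k+1)
        for i in range(n):
            # Sum over j != i, using old values only.
            s = A[i, :] @ x - A[i, i] * x[i]
            x_new[i] = (b[i] - s) / A[i, i]
        rel_err = (np.linalg.norm(x_new - x, ord=np.inf)
                   / np.linalg.norm(x_new, ord=np.inf))
        x = x_new
        if rel_err < tol:
            return x, k
    return x, max_iter


def in_place_sweep(A, b, x0, tol=1e-3, max_iter=100):
    """Same loop, but x is overwritten during the sweep (Gauss-Seidel)."""
    x = np.array(x0, dtype=np.double)
    n = len(b)
    for k in range(1, max_iter + 1):
        x_old = x.copy()
        for i in range(n):
            # x[:i] already holds values from the current sweep.
            s = A[i, :] @ x - A[i, i] * x[i]
            x[i] = (b[i] - s) / A[i, i]
        rel_err = (np.linalg.norm(x - x_old, ord=np.inf)
                   / np.linalg.norm(x, ord=np.inf))
        if rel_err < tol:
            return x, k
    return x, max_iter


# Assumed test system: strictly diagonally dominant, exact solution (1, 2, -1, 1).
A = np.array([[10.0, -1.0, 2.0, 0.0],
              [-1.0, 11.0, -1.0, 3.0],
              [2.0, -1.0, 10.0, -1.0],
              [0.0, 3.0, -1.0, 8.0]])
b = np.array([6.0, 25.0, -11.0, 15.0])
x_start = np.zeros(4)

x_jacobi, iters_jacobi = jacobi(A, b, x_start)
x_sweep, iters_sweep = in_place_sweep(A, b, x_start)
print(f"Jacobi:   {iters_jacobi} iterations, x = {x_jacobi}")
print(f"In place: {iters_sweep} iterations, x = {x_sweep}")
```

If one version assigns into `x[i]` directly while the other fills a fresh array, the two are different algorithms, so a different iteration count is expected rather than a bug in the stopping test.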

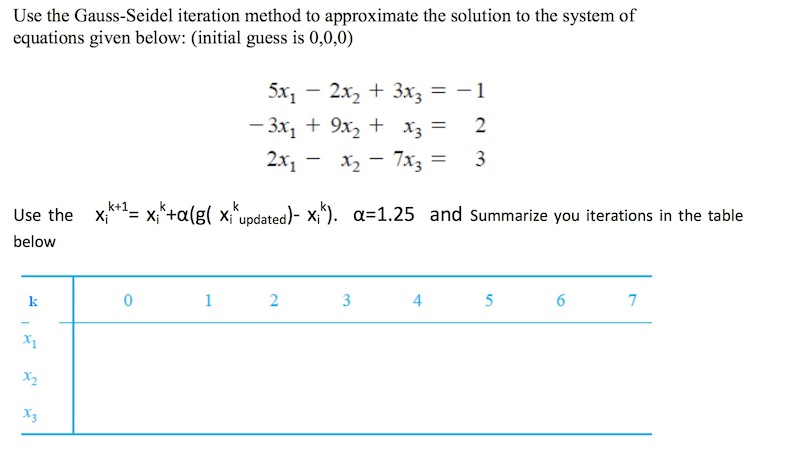

I have Python code that solves linear systems with the Gauss-Seidel method, using NumPy and SciPy. I'm implementing the code and an example from the book 'Numerical Analysis' by Burden and Faires. The problem is that I obtain the exact solution, but with more iterations: 10 iterations with a tolerance of 0.0000001, whereas the book obtains the solution with only 6 iterations and a tolerance of 0.001. I think the problem comes from the infinity norm I compute with SciPy to measure the error. When I don't use the error in the code (only iterations), I obtain the same result as the book.

Here's my Python code. It begins with `import numpy as np` and defines `def gauss_seidel(A, b, x_0, max_iterations=15, tolerance=0.0000001):`. The error is computed as `error = (diff, ord=np.inf, axis=None) / \`, the solver is called as `Solution = gauss_seidel(A, b, x_0, tolerance=0.001)`, and the result is printed with `f'Solution = iterations')`.

Output: WITH TOLERANCE = 0.0000001 Solution = with 10 iterations

Your GS solver is nicely implemented! But is it important that you run your own GS solver? If not, the different SciPy solvers are usually much faster than GS, even with stricter convergence criteria.

For this small equation system, the direct solvers `np.linalg.solve()` and `()` are up to 10 to 20 times faster than GS. For larger systems, the iterative solvers are more suitable: `bicgstab` will be much faster than GS with increasing size (but this example is too small to be effective).

….34 µs ± 937 ns per loop (mean ± std. dev. of 7 runs, 10,000 loops each)
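To make the link between tolerance and iteration count concrete, here is a minimal Gauss-Seidel sketch with a relative infinity-norm stopping rule. It is not the code from the question: the function name `gauss_seidel_sketch`, the test system, and the use of `np.linalg.norm` for the error are assumptions. It runs the same solver with a loose and a tight tolerance and checks the result against the direct solver `np.linalg.solve()`.

```python
import numpy as np


def gauss_seidel_sketch(A, b, x_0, max_iterations=100, tolerance=1e-7):
    """Gauss-Seidel; stops when ||x(k) - x(k-1)||_inf / ||x(k)||_inf < tolerance."""
    x = np.array(x_0, dtype=float)
    for iteration in range(1, max_iterations + 1):
        x_prev = x.copy()
        for i in range(len(b)):
            # Components before i come from the current sweep,
            # components after i from the previous one.
            s_before = A[i, :i] @ x[:i]
            s_after = A[i, i + 1:] @ x_prev[i + 1:]
            x[i] = (b[i] - s_before - s_after) / A[i, i]
        diff = x - x_prev
        error = (np.linalg.norm(diff, ord=np.inf)
                 / np.linalg.norm(x, ord=np.inf))
        if error < tolerance:
            return x, iteration
    return x, max_iterations


# Assumed test system (strictly diagonally dominant), not taken from the question.
A = np.array([[10.0, -1.0, 2.0, 0.0],
              [-1.0, 11.0, -1.0, 3.0],
              [2.0, -1.0, 10.0, -1.0],
              [0.0, 3.0, -1.0, 8.0]])
b = np.array([6.0, 25.0, -11.0, 15.0])
x_0 = np.zeros(4)

x_loose, iters_loose = gauss_seidel_sketch(A, b, x_0, tolerance=1e-3)
x_tight, iters_tight = gauss_seidel_sketch(A, b, x_0, tolerance=1e-7)
x_direct = np.linalg.solve(A, b)  # direct solver for reference

print(f"tolerance=1e-3: {iters_loose} iterations")
print(f"tolerance=1e-7: {iters_tight} iterations")
print(f"max deviation from direct solve: {np.max(np.abs(x_tight - x_direct))}")
```

Under these assumptions, the tighter tolerance needs more sweeps on the same system, so comparing a run at 0.0000001 against a book result quoted at 0.001 is not a like-for-like comparison.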
